Invented by Oliver Hoffmann and Frank Dierkes

Automated cars need to know exactly what is around them, but the data they rely on can be outdated or wrong. This article explains a new way to check whether the information describing a car’s surroundings is true before the car relies on it. We explain why this matters, which ideas came before, and what is new about this invention.

Background and Market Context

Self-driving cars are becoming more common each year, and they need to make quick and safe decisions. A car that drives itself must reliably “see” the world around it so it can stay in its lane, stop at red lights, and avoid accidents. To do this, cars use sensors such as cameras, radar, and lidar, which detect what is nearby: the road, signs, and other cars.

But sensors are not perfect. A camera might miss a lane marking because of glare from a low sun. Radar might miss a small object. Lidar might not work well in rain or fog. On top of that, cars also use digital maps, data from other cars, and sometimes information from the internet. This extra data is called “predefined data.” It can tell a car about the road ahead, speed limits, or where construction is happening.

The problem is that not all this data is always correct. Maps may be out of date. Data sent from other cars can have errors. Sometimes, the data might not match what is actually on the road. If a car trusts the wrong information, it could make a bad decision. This is a big problem, especially when cars are driving themselves and people are trusting them.

The car industry uses a risk classification called ASIL, short for Automotive Safety Integrity Level, which is defined in the functional safety standard ISO 26262. It runs from ASIL A to ASIL D, and the higher the level, the stricter the safety requirements. Cars that drive themselves at SAE Level 3 or higher, where the system rather than the human driver watches the road, generally need the strictest levels for their safety-critical functions. If the data the car uses is not reliable, those functions cannot meet these requirements.

Car makers, software companies, and safety experts are all looking for ways to make sure these cars use the right information, even when the data comes from sources that are not always up to date. Self-driving cars will only succeed in the market if people believe these cars will keep them safe. That is why checking whether the information a car uses is correct is so important.

In summary, the market needs a way for cars to double-check their information before using it for important decisions. The invention discussed here is designed to solve this problem by cross-checking that information against the car’s own sensors using a set of rules, making self-driving cars safer and more trustworthy.

Scientific Rationale and Prior Art

Before looking at the new invention, let’s see how cars have tried to solve this problem in the past. Self-driving cars already use many tricks to figure out what is around them. Often, they use a mix of digital maps, sensors on the car, and sometimes information from other cars. All this data is put together to help the car understand where it is and what is nearby.

Earlier ideas mostly worked by comparing what the car’s sensors see with what the map says. For example, a system might combine the car’s GPS position with lane markings detected in a camera image and matched to the map to pin down the car’s exact position. Some systems compare lane markings from maps with those in real-time camera images to make sure they match. Others use radar or lidar together with map data to check whether the road layout is the same.

One known method is to use a digital map to predict where the lanes are, then use a camera to find lane markings and make sure the two sources agree. If they don’t match, the car knows something might be wrong. Other ideas use swarm data, meaning information collected from many cars on the road. By combining what many cars see, the system tries to build a more accurate picture of the road.
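
The map-versus-camera comparison described above can be sketched in a few lines of Python. This is a minimal illustration, not code from any of the earlier systems: lane lines are reduced to lateral offsets in metres, and the function name `lanes_agree` and the 0.3 m tolerance are assumptions. It also shows a weakness of single-sensor checks: an empty camera result looks exactly like a disagreement, whether the map is wrong or the view was simply blocked.

```python
def lanes_agree(map_offsets_m, camera_offsets_m, tolerance_m=0.3):
    """Illustrative prior-art style check: every lane line the camera sees
    must lie within tolerance_m of some lane line predicted by the map.
    Offsets are lateral distances from the vehicle centreline in metres."""
    if not map_offsets_m or not camera_offsets_m:
        # Missing data from one source: this simple check cannot tell
        # "camera was blocked" apart from "map is wrong".
        return False
    return all(
        min(abs(c - m) for m in map_offsets_m) <= tolerance_m
        for c in camera_offsets_m
    )


map_lines = [-5.25, -1.75, 1.75]  # map: three lane lines bounding two lanes
print(lanes_agree(map_lines, [-1.70, 1.80]))        # True
print(lanes_agree(map_lines, [-1.70, 1.80, 5.30]))  # False: a line the map lacks
print(lanes_agree(map_lines, []))                   # False, even if only occluded
```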

There are also methods that use computer models (like neural networks) to predict the road layout or traffic situations. Some ideas use machine learning to guess what might happen next, like how a car ahead might move or if a new lane is opening up.

However, all these old ideas have some problems. Many of them depend on just one type of sensor or just one kind of data. Some only use maps and cameras, or only radar and map data. If the map is wrong or the sensor misses something, the car might not notice. Most past systems do not check if the data is safe enough for the highest safety levels needed for self-driving cars.

Another problem is that sometimes a sensor can’t see a part of the road because another car is blocking the view. Or, if there is fog or heavy rain, some sensors might not work well. The car needs to know when it can and cannot trust its own sensors.

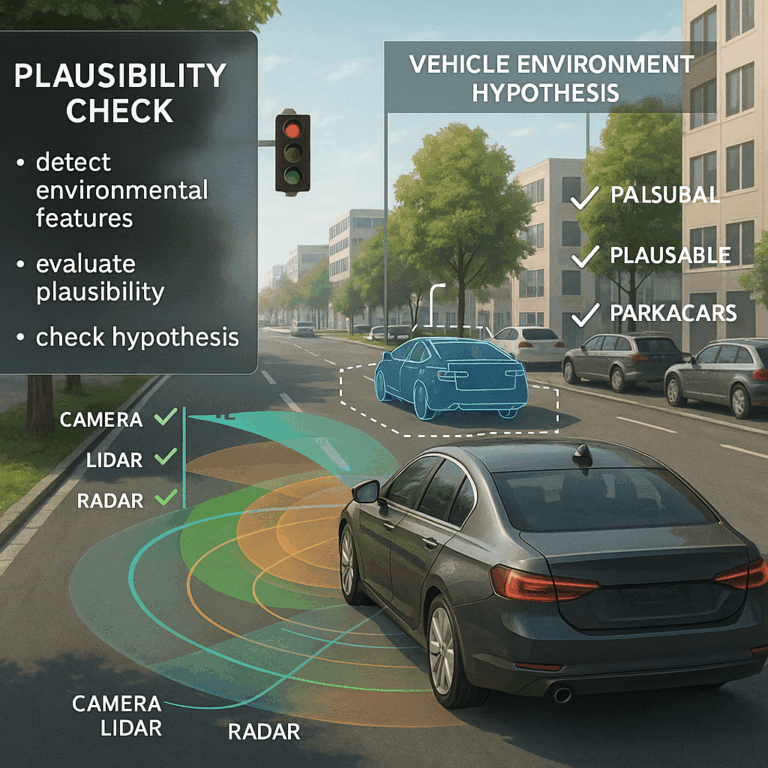

The new invention builds on these ideas but goes further. It does not just compare data from one sensor with a map. Instead, it uses a mix of different sensors (like camera, radar, and lidar) and checks if each piece of data meets special rules, called plausibility criteria. It also considers if the sensors could actually see the part of the road being checked. This makes the system smarter about when to trust or not trust the data.

In summary, while older methods tried to solve the problem of bad data by simple comparisons, they often missed important details or did not reach the high safety needed for self-driving cars. The new invention aims to fix these gaps by using multiple sensors, checking each one’s ability to see, and using smart rules for each situation.

Invention Description and Key Innovations

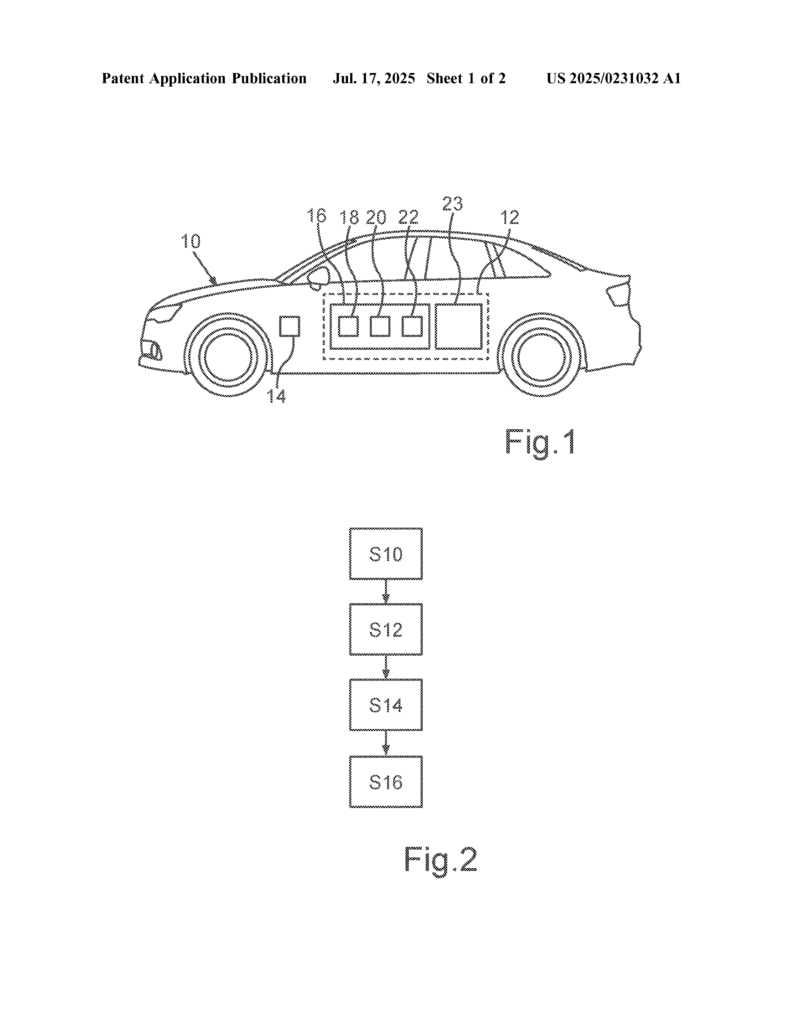

Now let’s look at what makes this invention special and how it works. The main idea is to create a method and a system that can check if what the car believes about its environment is actually true, before using that information to make driving decisions.

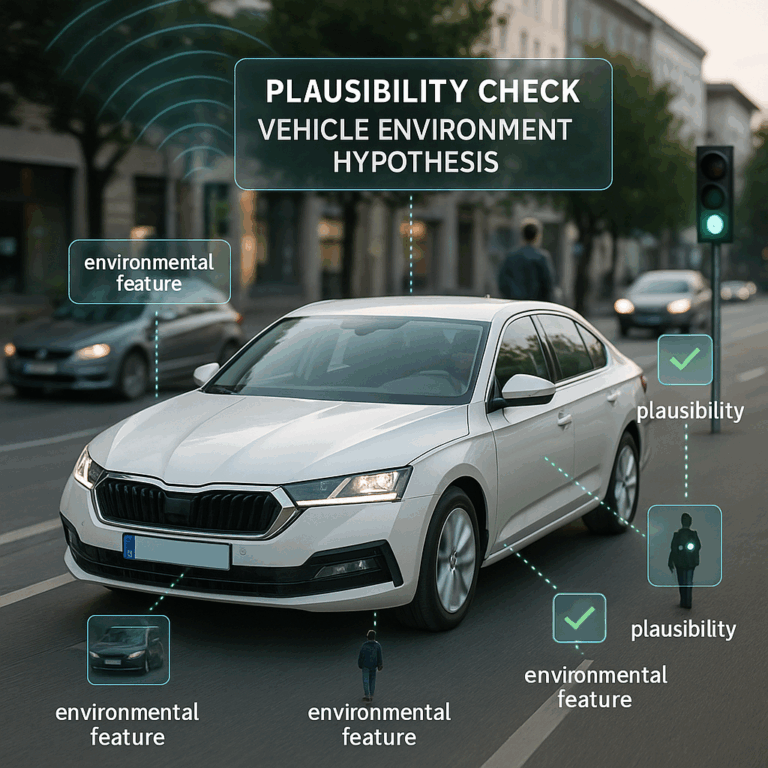

The process starts with a “vehicle environment hypothesis.” Think of this as an assumption about something around the car—like how many lanes are on the road, where the lanes are, or if there is a construction zone ahead. This hypothesis comes from sources like digital maps, data from other cars, or from computer models using artificial intelligence.
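
As a data structure, a hypothesis is a claim together with its origin and a flag that stays unset until the claim has been checked. The sketch below is hypothetical: the class `EnvironmentHypothesis`, its fields, and the lane-line representation are assumptions chosen for illustration, not the format used in the patent application.

```python
from dataclasses import dataclass
from enum import Enum


class Source(Enum):
    """Where a hypothesis comes from (the sources named in the article)."""
    DIGITAL_MAP = "digital_map"
    SWARM_DATA = "swarm_data"  # data from other vehicles
    AI_MODEL = "ai_model"      # prediction from a learned model


@dataclass(frozen=True)
class EnvironmentHypothesis:
    """An unverified assumption about the vehicle's surroundings (hypothetical layout)."""
    source: Source
    lane_count: int
    lane_line_offsets_m: tuple  # expected lateral positions of lane lines, metres
    construction_zone_ahead: bool = False
    verified: bool = False      # stays False until a confirmed copy is made after checking

    def __post_init__(self):
        # n lanes are bounded by n + 1 lane lines.
        if len(self.lane_line_offsets_m) != self.lane_count + 1:
            raise ValueError("lane_count does not match number of lane lines")


hyp = EnvironmentHypothesis(Source.DIGITAL_MAP, 2, (-5.25, -1.75, 1.75))
print(hyp.verified)  # False
```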

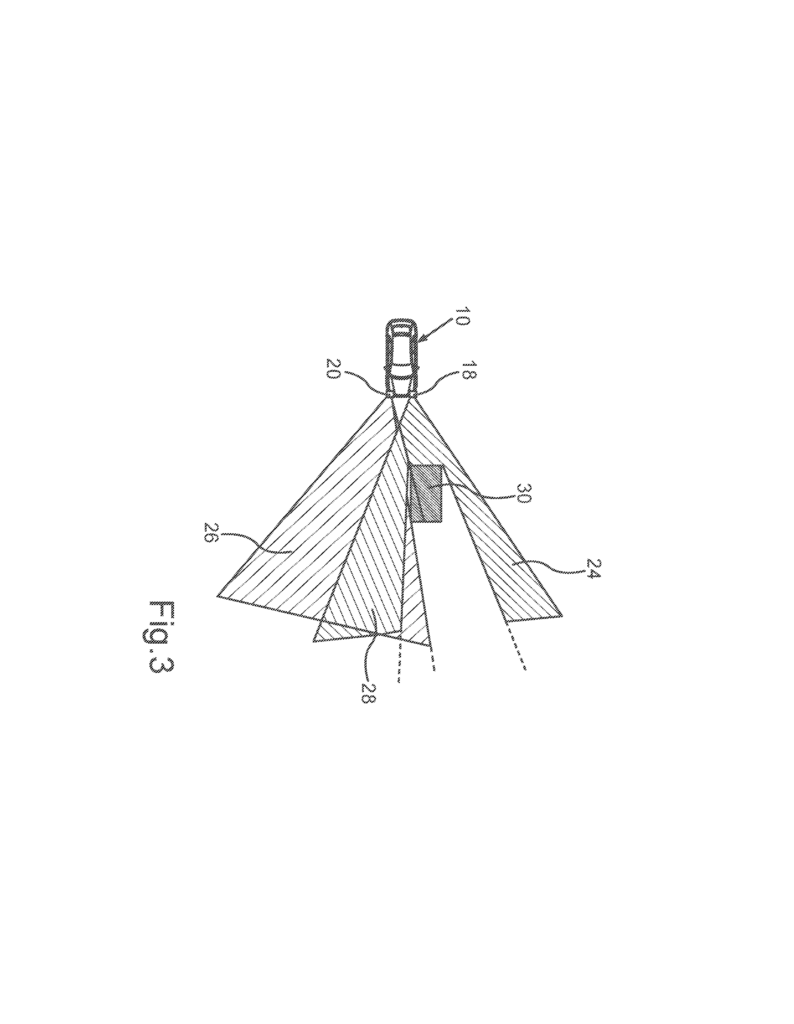

Next, the car uses its sensors to look at the real world. It could be a camera spotting lane markings, a radar sensing cars or barriers, or a lidar measuring the shape of the road and objects. Each sensor collects what are called “environmental features.” These are things like road signs, lane markings, guardrails, or other vehicles.
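
Environmental features can be represented in the same way: each detection records which sensor produced it, what kind of feature it is, and where it was seen. The field names, the vehicle coordinate convention (x forward, y to the left), and the 0 to 1 confidence scale are assumptions made for this sketch.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class SensorType(Enum):
    CAMERA = "camera"
    RADAR = "radar"
    LIDAR = "lidar"


class FeatureType(Enum):
    LANE_MARKING = "lane_marking"
    GUARDRAIL = "guardrail"
    ROAD_SIGN = "road_sign"
    VEHICLE = "vehicle"


@dataclass(frozen=True)
class EnvironmentalFeature:
    sensor: SensorType
    kind: FeatureType
    x_m: float         # distance ahead of the vehicle (assumed frame)
    y_m: float         # lateral offset, positive to the left (assumed)
    confidence: float  # detector confidence in [0, 1] (assumed scale)


features = [
    EnvironmentalFeature(SensorType.CAMERA, FeatureType.LANE_MARKING, 20.0, 1.8, 0.9),
    EnvironmentalFeature(SensorType.LIDAR, FeatureType.LANE_MARKING, 22.0, 1.7, 0.7),
    EnvironmentalFeature(SensorType.RADAR, FeatureType.GUARDRAIL, 35.0, 5.9, 0.8),
]

# Group detections by sensor so each sensor's evidence can be judged separately.
by_sensor = defaultdict(list)
for f in features:
    by_sensor[f.sensor].append(f)
print(len(by_sensor))  # 3
```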

The key step is checking each environmental feature to see if it supports or challenges the environment hypothesis. This is done using plausibility criteria. These are special rules that depend on what kind of feature it is (like a lane marking or a sign) and which sensor found it. For example, the system may require that if a camera sees a lane marking, the marking must be within a certain distance and have a certain shape to count as a match. If radar sees a guardrail, it should be in the expected position. Each sensor and feature combination can have its own rules.
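
One simple way to express “each sensor and feature combination can have its own rules” is a lookup table keyed by (sensor, feature type). The thresholds below (0.3 m, 2 m, 0.5 m, 1.0 m) and the names `CRITERIA` and `supports` are illustrative assumptions; the article notes that in the invention such criteria can be derived from simulations.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional, Tuple


@dataclass(frozen=True)
class Feature:
    sensor: str      # "camera", "radar" or "lidar"
    kind: str        # "lane_marking", "guardrail", ...
    y_m: float       # measured lateral offset, metres
    length_m: float  # observed length of the feature, metres


# A criterion receives the feature and the offset the hypothesis expects.
Criterion = Callable[[Feature, float], bool]

# Hypothetical plausibility criteria, one per (sensor, feature kind) pair.
CRITERIA: Dict[Tuple[str, str], Criterion] = {
    # Camera lane marking: close to the expected line and long enough to be real.
    ("camera", "lane_marking"): lambda f, exp: abs(f.y_m - exp) <= 0.3 and f.length_m >= 2.0,
    # Lidar lane marking (from reflectivity): looser lateral tolerance.
    ("lidar", "lane_marking"): lambda f, exp: abs(f.y_m - exp) <= 0.5,
    # Radar guardrail: within 1 m of where the hypothesis puts the road edge.
    ("radar", "guardrail"): lambda f, exp: abs(f.y_m - exp) <= 1.0,
}


def supports(feature: Feature, expected_y_m: float) -> Optional[bool]:
    """True or False if a rule exists for this pair; None if no rule applies."""
    rule = CRITERIA.get((feature.sensor, feature.kind))
    if rule is None:
        return None
    return rule(feature, expected_y_m)


print(supports(Feature("camera", "lane_marking", 1.80, 3.0), 1.75))  # True
print(supports(Feature("camera", "lane_marking", 2.30, 3.0), 1.75))  # False: 0.55 m off
print(supports(Feature("radar", "lane_marking", 1.75, 3.0), 1.75))   # None: no rule defined
```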

An important and unique part of the invention is that it checks not just what the sensors see, but also what they could see. If a sensor cannot see a certain part of the road because it is blocked or out of range, the system knows not to count missing data as a negative. This is called considering the “field of vision” of each sensor. It takes into account where each sensor is placed on the car, its angle, and its range. If a certain area is blocked, like by another car or a wall, the system notes that this sensor could not check that part.
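
The field-of-vision idea can be illustrated with simple 2-D geometry: a sensor can see a point if it is within range, inside the sensor’s opening angle, and not hidden behind another object. This sketch models each sensor as a wedge and each occluding object as a circle; those shapes, the parameter names, and the three-way result (“supports”, “contradicts”, “not_checkable”) are assumptions, not the patent’s model. The key behaviour matches the text: a missing detection counts against the hypothesis only when the sensor could actually have seen the spot.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class SensorFov:
    mount_x_m: float       # mounting position in the vehicle frame (x forward)
    mount_y_m: float       # mounting position (y to the left)
    yaw_rad: float         # direction the sensor points, relative to the x-axis
    half_angle_rad: float  # half of the horizontal opening angle
    max_range_m: float


@dataclass(frozen=True)
class CircleObstacle:
    x_m: float
    y_m: float
    radius_m: float


def _segment_hits_circle(ax, ay, bx, by, c):
    """True if the line segment A->B passes within c.radius_m of the circle centre."""
    dx, dy = bx - ax, by - ay
    seg_len_sq = dx * dx + dy * dy
    if seg_len_sq == 0.0:
        return math.hypot(c.x_m - ax, c.y_m - ay) <= c.radius_m
    # Project the centre onto the segment, clamped to the segment's ends.
    t = ((c.x_m - ax) * dx + (c.y_m - ay) * dy) / seg_len_sq
    t = max(0.0, min(1.0, t))
    px, py = ax + t * dx, ay + t * dy
    return math.hypot(c.x_m - px, c.y_m - py) <= c.radius_m


def can_see(sensor, x_m, y_m, obstacles=()):
    """Range, opening-angle and line-of-sight test for one point."""
    dx, dy = x_m - sensor.mount_x_m, y_m - sensor.mount_y_m
    if math.hypot(dx, dy) > sensor.max_range_m:
        return False
    # Signed angle between the target bearing and the sensor axis, wrapped to [-pi, pi].
    diff = math.atan2(dy, dx) - sensor.yaw_rad
    off_axis = math.atan2(math.sin(diff), math.cos(diff))
    if abs(off_axis) > sensor.half_angle_rad:
        return False
    return not any(
        _segment_hits_circle(sensor.mount_x_m, sensor.mount_y_m, x_m, y_m, o)
        for o in obstacles
    )


def evaluate(sensor, x_m, y_m, matched, obstacles=()):
    """matched: a feature passing its plausibility criterion was found here.
    A missing match only counts against the hypothesis if the spot was visible."""
    if matched:
        return "supports"
    return "contradicts" if can_see(sensor, x_m, y_m, obstacles) else "not_checkable"


front_camera = SensorFov(2.0, 0.0, 0.0, math.radians(30), 80.0)
truck_ahead = CircleObstacle(15.0, 0.0, 1.5)
print(evaluate(front_camera, 40.0, 0.0, False, [truck_ahead]))   # not_checkable (blocked)
print(evaluate(front_camera, 40.0, 10.0, False, [truck_ahead]))  # contradicts (visible, nothing found)
print(evaluate(front_camera, 100.0, 0.0, False))                 # not_checkable (out of range)
```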

The system also combines the results from different sensors. If at least two different environmental features, from different sensors, confirm the hypothesis with enough certainty, the system can say the hypothesis is plausible. This reduces the chance of mistakes from relying on just one sensor or one type of data.
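
The “two independent confirmations” rule can be written as a small function over per-check results. The `Check` record, the 0.7 confidence threshold, and the extra rule that one confident contradiction vetoes the hypothesis are assumptions added for illustration; the article itself only states the requirement for confirmation from different sensors. Checks marked “not_checkable” (the sensor could not see the area) are deliberately neutral.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Check:
    sensor: str        # sensor that produced the feature
    feature: str       # e.g. "lane_marking", "guardrail"
    result: str        # "supports", "contradicts" or "not_checkable"
    confidence: float  # certainty of this single check, 0 to 1 (assumed scale)


def hypothesis_plausible(checks, min_confidence=0.7, min_sensors=2):
    """Plausible if confident support comes from at least min_sensors different
    sensors. Assumed extra rule: any confident contradiction vetoes the hypothesis.
    "not_checkable" results are ignored, so occluded sensors neither help nor hurt."""
    confident = [c for c in checks if c.confidence >= min_confidence]
    if any(c.result == "contradicts" for c in confident):
        return False
    supporting_sensors = {c.sensor for c in confident if c.result == "supports"}
    return len(supporting_sensors) >= min_sensors


two_sensors = [
    Check("camera", "lane_marking", "supports", 0.9),
    Check("radar", "guardrail", "supports", 0.8),
    Check("lidar", "lane_marking", "not_checkable", 0.0),
]
camera_only = [
    Check("camera", "lane_marking", "supports", 0.9),
    Check("camera", "road_sign", "supports", 0.95),
]
print(hypothesis_plausible(two_sensors))  # True
print(hypothesis_plausible(camera_only))  # False: one sensor, however confident
```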

The plausibility criteria can also be set using computer simulations. This allows the rules to be tested across many simulated driving situations to find the best settings for checking each feature. The system can also use artificial intelligence to refine how it checks the data, learning from past experience.
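
Setting a criterion by simulation can be sketched as a threshold sweep: generate many scenarios where the map is right and many where it is wrong, then pick the tightest tolerance that still accepts nearly all correct cases. The “simulator” here is only Gaussian noise around a known map error; the 0.1 m noise level, the 0.5 m map error, the 99 % acceptance target, and the function names are all assumptions. A real setup would use a full sensor and traffic simulation.

```python
import random


def simulate_offsets(n, map_error_m, noise_sd_m, rng):
    """Hypothetical simulator: gap between a camera-measured lane line and the
    hypothesised line when the map is off by map_error_m."""
    return [abs(map_error_m + rng.gauss(0.0, noise_sd_m)) for _ in range(n)]


def tune_tolerance(candidates, target_acceptance=0.99, n=5000, seed=0):
    """Return (tolerance, acceptance of correct maps, acceptance of wrong maps)
    for the tightest candidate meeting target_acceptance, or None."""
    rng = random.Random(seed)
    correct = simulate_offsets(n, 0.0, 0.1, rng)  # map right, sensor noise only
    wrong = simulate_offsets(n, 0.5, 0.1, rng)    # map shifted by 0.5 m
    for tol in sorted(candidates):
        accept_correct = sum(d <= tol for d in correct) / n
        if accept_correct >= target_acceptance:
            false_accept = sum(d <= tol for d in wrong) / n
            return tol, accept_correct, false_accept
    return None


tol, accept_rate, false_accept_rate = tune_tolerance([0.1, 0.2, 0.3, 0.4, 0.5])
print(tol)  # 0.3
print(f"accepts {accept_rate:.1%} of correct maps, {false_accept_rate:.1%} of wrong ones")
```

With this noise level the sweep settles on 0.3 m, yet about 2 % of the wrong-map scenarios still pass, which is one reason a single criterion from a single sensor is not trusted on its own.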

Once all this checking is done, the system creates a kind of report, showing which sensors and features supported the hypothesis, which ones could not check it, and which ones disagreed. This report can use confidence values to show how sure the system is about its decision.
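
A report of this kind can be as simple as three lists plus a combined confidence. How the invention combines confidence values is not stated in this article; the noisy-OR rule below (the hypothesis is wrong only if every supporting check is wrong, treating the checks as independent) is an assumption, as are the class and field names. Contradictions are listed rather than folded into the number, so a separate decision rule can still act on them.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Check:
    sensor: str
    feature: str
    result: str        # "supports", "contradicts" or "not_checkable"
    confidence: float  # 0 to 1 (assumed scale)


@dataclass
class PlausibilityReport:
    supporting: list = field(default_factory=list)
    contradicting: list = field(default_factory=list)
    not_checkable: list = field(default_factory=list)
    confidence: float = 0.0  # combined confidence from the supporting checks


def build_report(checks):
    report = PlausibilityReport()
    buckets = {
        "supports": report.supporting,
        "contradicts": report.contradicting,
        "not_checkable": report.not_checkable,
    }
    for c in checks:
        buckets[c.result].append(f"{c.sensor}:{c.feature}")
    # Assumed noisy-OR combination: if supporting checks are independent, the
    # chance that all of them are wrong is the product of (1 - confidence).
    all_wrong = 1.0
    for c in checks:
        if c.result == "supports":
            all_wrong *= 1.0 - c.confidence
    report.confidence = 1.0 - all_wrong
    return report


report = build_report([
    Check("camera", "lane_marking", "supports", 0.9),
    Check("radar", "guardrail", "supports", 0.8),
    Check("lidar", "lane_marking", "not_checkable", 0.0),
])
print(report.supporting)            # ['camera:lane_marking', 'radar:guardrail']
print(report.not_checkable)         # ['lidar:lane_marking']
print(round(report.confidence, 3))  # 0.98
```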

If the hypothesis is confirmed, the data can be used for important driving tasks, like keeping the car in its lane or following the right speed limit. If the hypothesis is not confirmed, the data is marked as unreliable and not used for critical decisions.
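
This final gate can be enforced in code by tagging data with the outcome of the check and letting safety-critical functions accept only confirmed data. `TaggedData`, `speed_limit_for_control`, and the fallback to a speed limit read from a traffic sign are hypothetical names and behaviour chosen for this sketch; a real system’s fallback (for example, degrading the function or handing control back to the driver) would be defined by its safety concept.

```python
from dataclasses import dataclass
from typing import Generic, Optional, TypeVar

T = TypeVar("T")


@dataclass(frozen=True)
class TaggedData(Generic[T]):
    value: T
    usable_for_critical: bool  # True only if the plausibility check confirmed it


def tag(value: T, plausible: bool) -> TaggedData[T]:
    """Attach the plausibility result to a piece of predefined data."""
    return TaggedData(value, usable_for_critical=plausible)


def speed_limit_for_control(map_limit: TaggedData[int],
                            sign_limit_kph: Optional[int]) -> Optional[int]:
    """Safety-critical consumer: use map data only if it passed the check.
    Otherwise fall back to a limit read from a sign (assumed fallback);
    None means no trusted value is available and the function must degrade."""
    if map_limit.usable_for_critical:
        return map_limit.value
    return sign_limit_kph


print(speed_limit_for_control(tag(80, True), None))   # 80
print(speed_limit_for_control(tag(80, False), 60))    # 60
print(speed_limit_for_control(tag(80, False), None))  # None
```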

This invention is not just a method but also a system that includes the sensors, a computing device to process the data, and the logic to perform these checks. It can be built into many kinds of vehicles, in particular self-driving cars and trucks.

By using this method, car makers can take data from less-safe sources (like old maps or crowd-sourced information) and upgrade it to meet the highest safety levels. This makes self-driving cars both smarter and safer, and helps build trust with people who use them.

Conclusion

Self-driving cars need to make safe choices every second. The new invention explained here is a big step forward in making sure the data these cars use is always checked and confirmed before it is trusted. By using many sensors, smart rules, and checking each sensor’s ability to see, this system makes self-driving cars safer and more reliable. As the world moves closer to automated vehicles, inventions like this will be key to keeping everyone safe on the road.

The full patent application can be found at https://ppubs.uspto.gov/pubwebapp/ by searching for 20250231032.