Invented by Tingbo Hou and Matthias Grundmann

Video calls are now a part of everyday life. Whether you work from home, take online classes, or just want to chat with friends, you use video conferencing tools. But what if you don’t want everyone to see your messy bedroom or the busy street behind you? This is where video segmentation comes in. It helps you show only yourself on camera and swap your background for something more fun or private.

A new patent application promises to make this technology much better, faster, and available to everyone—even right inside your web browser. Let’s explore what this invention is about, how it fits into the market, the science behind it, and what makes it truly special.

Background and Market Context

People want privacy, professionalism, and creativity when they join video calls. With more people working remotely and meeting online, video conferencing tools are everywhere. Services like Zoom, Microsoft Teams, and Google Meet let people talk face-to-face from anywhere. But sometimes, your real-world background is not what you want to show.

That’s why virtual backgrounds have become so popular. They let you hide your actual room and show something else—a clean office, a fun beach, or even a simple blur. This helps you look more professional, stay private, or just have fun. But making virtual backgrounds work well is not easy. It means finding you (the subject) in each video frame and separating you from everything else behind you. This is called “video segmentation.”

Most video segmentation tools today are built into apps you have to download and install. Some work only on certain computers or phones. And often, the quality is just okay. Sometimes, your hair gets cut off, your hands disappear, or the background flickers. This happens because the computer needs to figure out which pixels belong to you and which to the background, and doing this well takes a lot of computer power.

Now, people want these features to work right inside their web browser. No more downloading big apps or worrying about updates. Just open a web page and join a meeting. But browsers have their limits. They don’t always have direct access to all the power of your computer, and running complex models in a browser has been hard.

This is where the new patent application comes in. It promises a way to bring high-quality video segmentation to the web. This means better backgrounds, smooth video, and privacy, all without extra apps. With work-from-home and online learning becoming normal, this technology is set to become a must-have for everyone using video calls.

Scientific Rationale and Prior Art

The key challenge in video segmentation is to separate the person from the background, frame by frame, in real time. To do this, computers use something called “image segmentation.” This is a task in computer vision where the computer decides which parts of an image belong together. For video calls, the goal is to find the person in every frame and mask out the rest.

Older methods used simple tricks, like looking for movement. If the background stayed still and only the person moved, it could work. But this breaks down if things in the background move or if the lighting changes. Some tools used “chroma key” (like green screens), but that needs special backgrounds and is not practical for everyone.
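To see why the movement trick is fragile, here is a minimal Python sketch of it. The function name, the tiny grayscale frames, and the threshold of 30 are all made up for illustration. It marks a pixel as "person" when it differs enough from a stored picture of the empty room.

```python
def motion_mask(background, frame, threshold=30):
    """Mark a pixel as person (1) when it differs from the reference
    background by more than `threshold`, otherwise background (0).
    Frames are lists of rows of grayscale values (0-255)."""
    return [
        [1 if abs(p - b) > threshold else 0 for p, b in zip(frame_row, bg_row)]
        for frame_row, bg_row in zip(frame, background)
    ]

# Reference picture of the empty room (hypothetical values).
background = [
    [100, 100, 100, 100],
    [100, 100, 100, 100],
]
# A "person" (brighter pixels) enters the middle of the frame.
with_person = [
    [100, 200, 200, 100],
    [100, 200, 200, 100],
]
# Same empty room, but the lights got brighter by 50 everywhere.
brighter_room = [[p + 50 for p in row] for row in background]

person_mask = motion_mask(background, with_person)
lighting_mask = motion_mask(background, brighter_room)
print(person_mask)    # [[0, 1, 1, 0], [0, 1, 1, 0]]
print(lighting_mask)  # [[1, 1, 1, 1], [1, 1, 1, 1]]
```

The first mask finds the person correctly. The second marks the entire empty room as "person" just because the lighting changed, which is exactly the failure described above.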

Modern segmentation uses machine learning. Here, computers “learn” what people look like by looking at lots of pictures. They build models—like neural networks—that can spot a person in any scene. Once trained, these models can be used to quickly analyze new images and make a “mask” that says which pixels are the person and which are not.

In recent years, segmentation architectures like U-Net and efficient backbone networks like MobileNet and EfficientNet have made segmentation faster and more accurate. Compact versions of these models can run on regular computers and even phones. Some companies have built their own segmentation into their apps, but these are often tied to their own software and not available in the browser.

Browsers have started to catch up. With technologies like WebGL and the newer WebGPU, browsers can use your computer’s graphics card (GPU) to run complex models quickly. But not many solutions fully use this power, and most segmentation in browsers is still slow or low-quality.

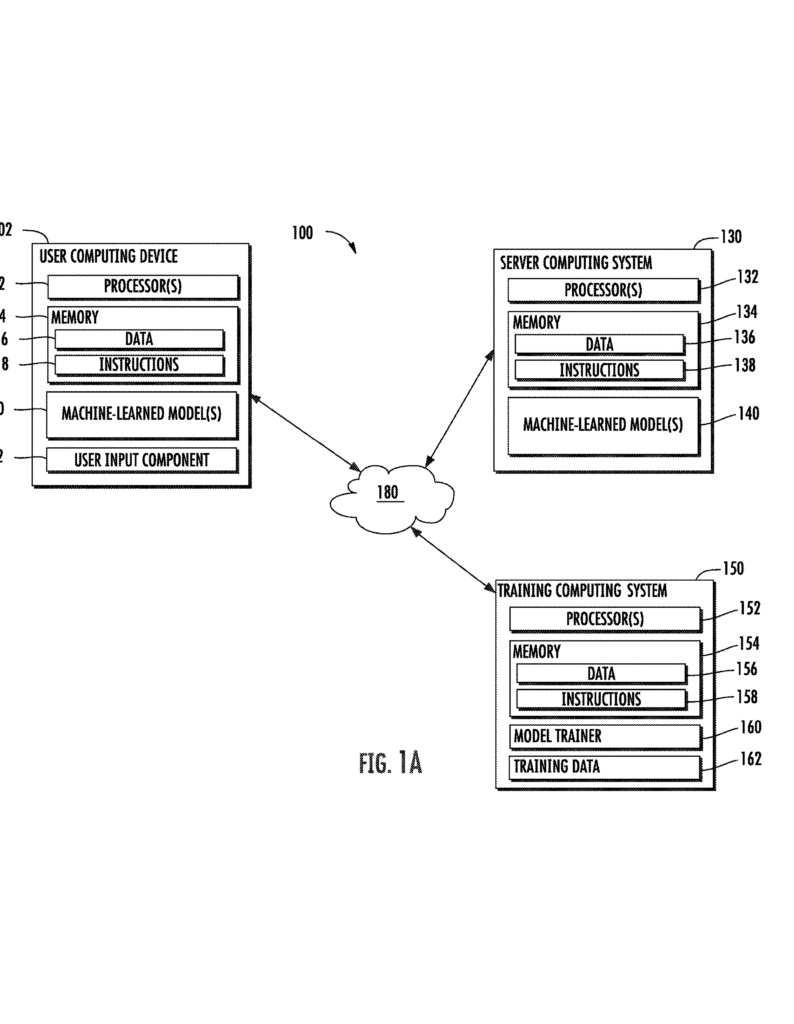

The patent application builds on this progress. It brings together advances in machine learning models, browser technology, and cloud computing. The result is a way to transmit only the needed software to your browser, use your device’s own GPU for fast processing, and deliver high-quality segmentation in real time.

Earlier inventions focused on either in-app solutions (requiring downloads) or cloud-based processing (which can be slow and uses lots of internet bandwidth). This new approach does the heavy lifting right in your browser, keeping your data private and the video smooth. It also lets you use virtual backgrounds or blur effects with much more detail—like showing every strand of hair or the edges of headphones.

Invention Description and Key Innovations

Let’s break down what this new patent application covers and what makes it stand out.

At its heart, the invention is a system for web-based video segmentation. It uses a smart mix of software, machine learning, and browser features. Here’s how it works in simple terms:


When you join a video call in your browser, the system downloads a small software package from a server. This package includes a trained machine learning model that knows how to spot people in video frames. The model is designed to be lightweight but powerful, often based on new versions of compact neural networks like MobileNetV3 or U-Net.

Your camera captures video frames. Each frame is sent through this model, right on your computer. The model analyzes the image, finds you, and creates a “mask” that separates you from the background.

Now comes the magic: the system takes this mask and creates a new image. You stay in the frame, but your background is replaced with whatever you want—a photo, a video, a blur, or even a cartoon world. This new, augmented image is then shown in your browser and sent to other people on the call.
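The background swap can be pictured as a per-pixel blend. The Python sketch below is only an illustration, not the method claimed in the application. It assumes the mask holds a value between 0 and 1 for each pixel (1 means "person", 0 means "background", and values in between cover soft edges such as hair), and the function name and sample colors are invented.

```python
def composite(person_frame, new_background, mask):
    """Blend per pixel: out = mask * person + (1 - mask) * background.
    Frames are rows of (r, g, b) tuples; mask values are floats in [0, 1]."""
    out = []
    for frame_row, bg_row, mask_row in zip(person_frame, new_background, mask):
        out_row = []
        for fg, bg, m in zip(frame_row, bg_row, mask_row):
            out_row.append(
                tuple(round(m * f + (1.0 - m) * b) for f, b in zip(fg, bg))
            )
        out.append(out_row)
    return out

# One row of three pixels: person, soft edge, real background.
camera = [[(200, 150, 100), (200, 150, 100), (50, 50, 50)]]
beach = [[(0, 100, 250), (0, 100, 250), (0, 100, 250)]]
mask = [[1.0, 0.5, 0.0]]

result = composite(camera, beach, mask)
print(result)  # [[(200, 150, 100), (100, 125, 175), (0, 100, 250)]]
```

The first pixel keeps the camera color, the last pixel is fully replaced by the beach, and the middle pixel is an even mix of the two. A blur effect would work the same way, with a blurred copy of the camera frame standing in for the beach image.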

What makes this system different? For one, it runs inside your browser using your device’s GPU for speed. This means you don’t need to install anything, and everything happens locally. Your original video never leaves your computer unless you want it to. Only the final, augmented video is sent out, keeping your information private.

The invention’s segmentation model is designed for both speed and quality. It uses blocks called encoders, bottlenecks, and decoders. The encoder takes in the image and finds features at many levels—like the outline of your head, your hands, and other details. The bottleneck block makes quick, rough predictions, and then the decoder refines these predictions to get sharp edges and fine details.
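One way to picture the encoder, bottleneck, and decoder is to follow the image size through each stage. The Python sketch below does only that. It assumes each encoder stage halves the resolution and each decoder stage doubles it, which is a common design but is not stated in this summary. The stage count and the 256 x 256 input are also assumptions.

```python
def trace_shapes(height, width, num_encoder_stages=4):
    """Return (stage name, height, width) tuples for a toy encoder-decoder.
    Assumption: every encoder stage halves the resolution and every
    decoder stage doubles it again."""
    stages = [("input", height, width)]
    h, w = height, width
    for i in range(1, num_encoder_stages + 1):
        h, w = h // 2, w // 2
        stages.append((f"encoder_{i}", h, w))
    # The bottleneck works at the smallest size, so its prediction is rough.
    stages.append(("bottleneck", h, w))
    for i in range(1, num_encoder_stages + 1):
        h, w = h * 2, w * 2
        stages.append((f"decoder_{i}", h, w))
    return stages

shapes = trace_shapes(256, 256)
for name, h, w in shapes:
    print(f"{name:>10}: {h} x {w}")
```

Under these assumptions the bottleneck sees a 16 x 16 grid, which is cheap to process but too coarse for hair or fingers. The decoder stages then bring the prediction back to 256 x 256, which is where the fine edges get restored.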

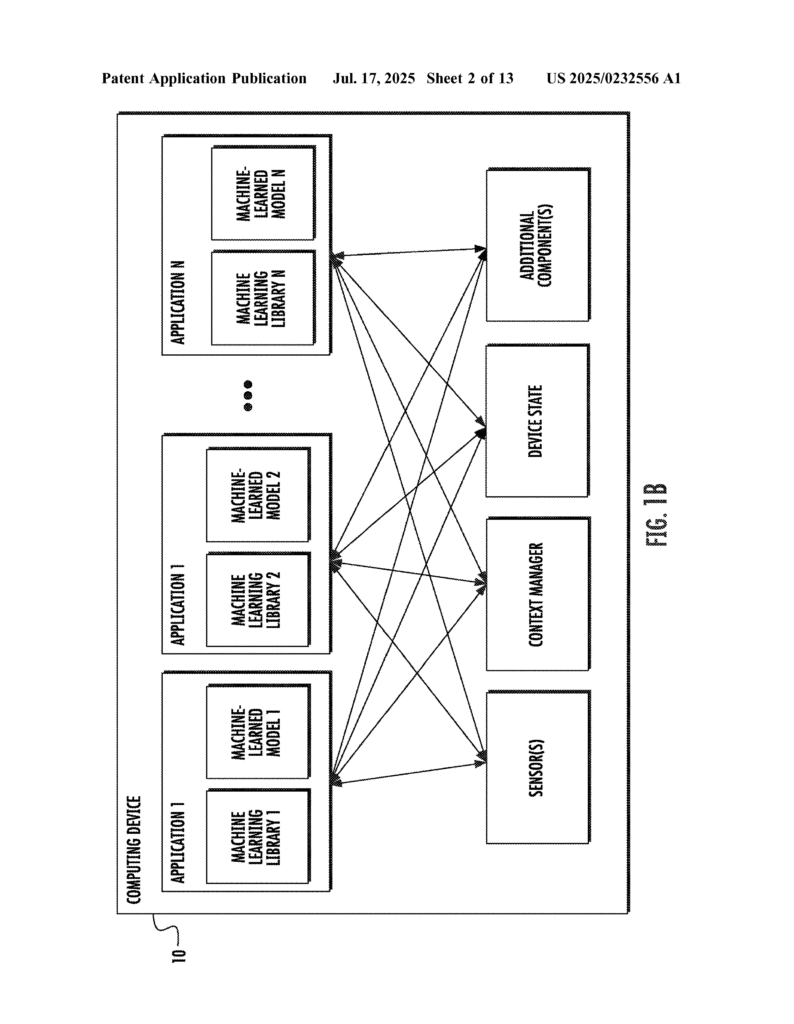

The model can handle high-definition video, keeping up with real-time calls. It can even work on different types of devices, from laptops to tablets, as long as the browser supports modern GPU features.

Another key part of the invention is how the model is delivered and updated. Instead of shipping a huge app, the server sends only what’s needed. If the model gets better or new features are added (like better handling of pets or objects), the next time you join a call, you get the latest version. This makes it easy to keep things up-to-date and secure.
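The update flow can be sketched as a simple version check. Everything in the Python snippet below is hypothetical: the `ModelCache` class stands in for whatever storage the browser offers, and `fake_download` stands in for the request to the server. The idea is only that the model is fetched again when the server advertises a version the device does not already have.

```python
class ModelCache:
    """Stand-in for browser-side storage; holds one model package."""

    def __init__(self):
        self.version = None
        self.package = None


def load_model(cache, latest_version, download):
    """Return (package, downloaded). Downloads only when the cached
    version differs from the version the server advertises."""
    if cache.version == latest_version:
        return cache.package, False
    cache.package = download(latest_version)
    cache.version = latest_version
    return cache.package, True


def fake_download(version):
    # Hypothetical placeholder for fetching the model from the server.
    return f"model-bytes-{version}"


cache = ModelCache()
first = load_model(cache, "v1", fake_download)   # nothing cached yet
second = load_model(cache, "v1", fake_download)  # same version, reuse
third = load_model(cache, "v2", fake_download)   # server has a newer model
print(first, second, third)
```

The first and third calls download a package, while the second reuses the stored copy. That is how a design like this could stay current without asking the user to install anything.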

The patent also covers how the system can send the augmented video to others on the call and receive their video streams to display. It’s designed to fit smoothly into existing video conferencing services, making it easy for companies to add high-quality virtual backgrounds to their web apps.

On a technical level, the model uses tricks like channel expansion, depthwise convolution, and channel compression to process images quickly. It can use different kinds of neural network layers, attention mechanisms, and upsampling to get the best results. The system can even store the model in your browser for later use, saving time and bandwidth.
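A quick count of weights shows why the expansion, depthwise convolution, and compression pattern is cheap. The numbers in the Python sketch below (32 channels, an expansion factor of 4, 3 x 3 filters) are illustrative assumptions, not values from the application, and biases and normalization layers are ignored.

```python
def dense_conv_weights(kernel, channels_in, channels_out):
    """Weights in an ordinary convolution: every output channel
    looks at every input channel through a kernel x kernel filter."""
    return kernel * kernel * channels_in * channels_out


def expand_depthwise_compress_weights(kernel, channels, expansion):
    """Weights in an expand -> depthwise -> compress block."""
    wide = channels * expansion
    expand = channels * wide            # 1x1 conv: channels -> wide
    depthwise = kernel * kernel * wide  # one kernel x kernel filter per channel
    compress = wide * channels          # 1x1 conv: wide -> channels
    return expand + depthwise + compress


block = expand_depthwise_compress_weights(kernel=3, channels=32, expansion=4)
dense = dense_conv_weights(kernel=3, channels_in=128, channels_out=128)
print(block)  # 9344
print(dense)  # 147456
```

With these numbers, the block filters 128 channels using 9,344 weights, while an ordinary 3 x 3 convolution over the same 128 channels would need 147,456. Fewer weights means less arithmetic per frame, which matters when every frame has to be processed in real time.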

Because the model runs locally, it can respond instantly to changes—like if you move, wave your hand, or pick up an object. The segmentation is sharp enough to handle fine details, so you don’t see jagged edges or missing parts. This is a big step up from older browser-based tools, which often looked choppy or slow.

Finally, the patent hints at future uses beyond video. The same technology could be used to improve audio—like removing background noise or muting unwanted sounds. It could also be used for other types of media, making the system flexible for many kinds of web applications.

Conclusion

This patent application is more than just a new way to do virtual backgrounds. It’s a big leap forward for privacy, convenience, and video quality in web-based video calls. By bringing fast, accurate video segmentation right into the browser and using the power of your device’s GPU, it makes high-definition virtual backgrounds available to anyone, anywhere, without downloads or complicated setup.

As remote work, online learning, and digital communication keep growing, tools like this will become even more important. If you want to stand out on your next video call, keep your background private, or just have fun, this technology may soon be powering your experience—making every call clearer, smoother, and much more user-friendly.

To read the full application, go to https://ppubs.uspto.gov/pubwebapp/ and search for 20250232556.