Hardware is hard—but it is not vague. Even before you ship thousands of units, you can track simple numbers that prove real progress. You do not need a big factory or a giant budget. You need clear signals that show your device works in the field, can be built again, and will earn trust from buyers. The right metrics turn early parts, small pilots, and lab days into a strong story investors believe.

This guide gives you those signals. We’ll keep it plain and practical. You’ll learn how to measure readiness, reliability, manufacturability, safety, and speed to dollars—without bloated dashboards. We’ll show what to log, how to plot it, and how to present it so a partner can retell your story in one slide. We’ll also show where IP fits, so the methods behind your gains become assets you own.

If you want a hands-on partner for the IP side, Tran.vc invests up to $50,000 in in-kind patent and IP services for robotics, AI, and deep tech teams. We help you build a moat while you build your metrics. Apply anytime at https://www.tran.vc/apply-now-form/.

Prototype Readiness You Can Defend

Before big orders, you need a clear signal that your device is real, safe, and repeatable. A “readiness” metric turns messy build notes into one view a buyer and an investor can trust. Keep it simple. Tie it to what a field user feels, not to lab pride.

Define a single readiness line

Write one sentence that says what “ready” means for this device today. Keep it tied to the job, not the lab bench. For example: “Runs an eight-hour shift at line speed with no manual re-teach.” This keeps your team honest and makes your updates easy to read.

Break “ready” into three plain parts you can measure: core function, safe behavior, and stable power/thermals. If any fails, the unit is not ready. You still log the run, but you do not claim readiness. This hard line builds trust.

Freeze the build state for each test. Note firmware hash, BOM rev, and enclosure version in your log. When a unit passes, you know exactly which mix passed. When it fails, you know exactly what changed. That is how progress stays real.

Measure with runs, not opinions

Run the device in a scene that looks like a normal shift. Use the real sensor, the real speed, and the real light. If your buyer has a daily warmup, include it. If they power-cycle during breaks, include that too. Reality makes small wins feel big.

Record time-to-first-use, time-between-errors, and safe-recovery time. These three clocks tell most of the story. Screenshots are nice, but clocks are proof. Clocks also help you catch regressions when new code lands.

Repeat the same run three days in a row. Readiness is not one lucky day. Steady performance across short, repeated windows is a clean pre-seed signal investors understand.
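As a minimal sketch of the readiness rule described in this section, assume a hypothetical run log where each run records the frozen build state and a pass/fail for each of the three parts. The `Run` class, its field names, and the `unit_ready` helper are illustrative assumptions, not part of any existing tool.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Run:
    """One full-shift run of one unit (hypothetical log shape)."""
    unit: str
    day: date
    firmware: str      # frozen build state: firmware hash or version
    bom_rev: str
    enclosure: str
    core_ok: bool      # core function held for the whole run
    safe_ok: bool      # safe behavior held
    thermal_ok: bool   # power and thermals stayed stable

    @property
    def passed(self) -> bool:
        # If any one part fails, the run is still logged but is not "ready".
        return self.core_ok and self.safe_ok and self.thermal_ok


def unit_ready(runs: list[Run], days_required: int = 3) -> bool:
    """True if the latest runs fall on consecutive days, share one
    build state, and all passed."""
    latest = sorted(runs, key=lambda r: r.day)[-days_required:]
    if len(latest) < days_required:
        return False
    consecutive = all(
        b.day - a.day == timedelta(days=1) for a, b in zip(latest, latest[1:])
    )
    same_build = len({(r.firmware, r.bom_rev, r.enclosure) for r in latest}) == 1
    return consecutive and same_build and all(r.passed for r in latest)
```

Requiring one shared build state across the three days is a design choice in this sketch: a pass that spans two firmware versions does not tell you which mix passed.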

Present in one calm frame

Show one number at the top: “Readiness: 2 of 3 units passed full-shift run (Rev B enclosure, FW 0.9.4).” This reads like control. Then show a tiny chart with the three clocks for each unit. The eye should see which unit dragged and why.

Add one dated label for the change that lifted the pass rate. “Fan curve tuned,” or “added dry-run guardrail.” Tie words to the clock that moved. Cause and effect is the tone you want in the room.

Close with the next constraint you will add. “Next: heat soak chamber at 40°C.” A steady ladder of bounds shows you are raising the bar on purpose, not chasing luck.

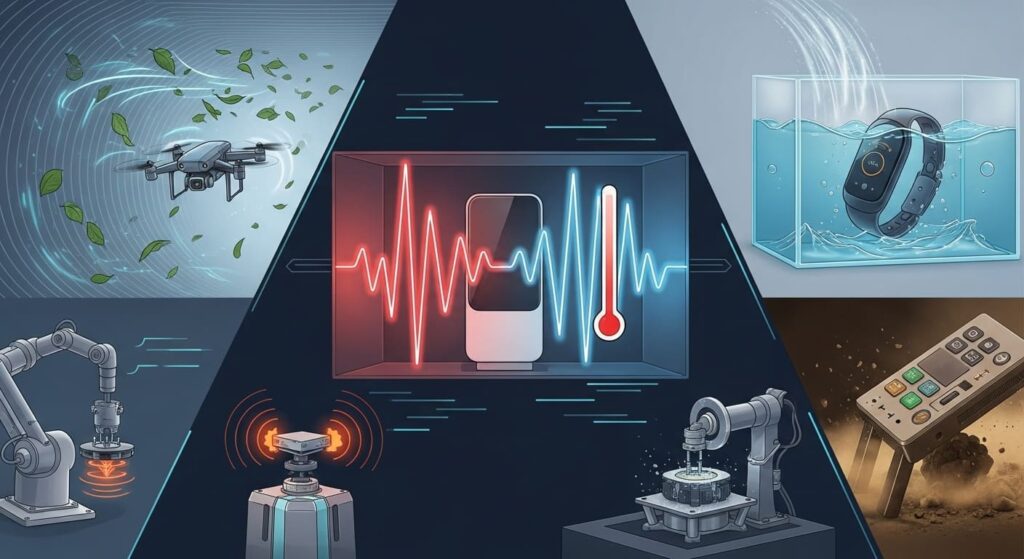

Reliability Under Real Conditions

Reliability is not a vibe. It is a handful of failure modes you can name, trigger, and fix. When you log those modes with simple, repeatable scenes, your reliability metric becomes a quiet, powerful proof—even at ten units.

Choose scenes your buyer actually hits

Write three daily moments your device must survive. A hot afternoon in a metal shed. A dusty intake after two hours. A shift change with a rough power cycle. Keep scenes boring and real. Flashy stress tests can wait.

Set the same input bounds each time. Same ambient temp, same line speed, same input mix. If you need a chamber or a rig, build the smallest one that mimics the field. Tight bounds make your charts honest and your fixes transferable.

Explain why each scene matters in one short paragraph. “Dust raises false rejects; this scene runs two hours without cleaning to match shift pattern.” This context stops side debates and protects your calendar.

Instrument failure the same way, every time

Give each failure a short name and a cause tag. “HOT-THROTTLE,” “DUST-OPTICS,” “BROWNOUT-BOOT.” Names beat paragraphs when you are tired. Tags make patterns pop in a week.

Log time-to-failure and time-to-recover for each scene. Add a note for whether the device chose the safe path (defer, slow, stop) and whether an operator intervened. This separates safe annoyance from risky behavior.

Re-run the same scene after every fix. Do not change the scene and the code at once if you can avoid it. One lever at a time keeps learning fast and proves the fix actually fixed the thing.

Turn each fix into a durable asset

Write the fix like a method: inputs, checks, actions, fallbacks. Keep code details out. Field steps in plain order are easy to reuse and review. They are also the backbone of strong IP claims.

If your recovery logic under heat or dust is novel and practical, file a narrow provisional. A safe, fast fallback that others do not have is a real moat in hardware. It also reads well in your deck next to a reliability chart.

Track the same failure name across revs. “DUST-OPTICS: 14 → 6 events after baffle, 0 after purge preset.” Dates under those arrows say more than a page of adjectives.
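One way to keep that per-rev trail honest is to generate it from the failure log rather than typing it by hand. The sketch below assumes a hypothetical `FailureEvent` record with the fields this section asks you to log; the class and the helper names are illustrative only.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class FailureEvent:
    """One logged failure (hypothetical record shape)."""
    name: str                 # short failure name, e.g. "DUST-OPTICS"
    rev: str                  # build revision the event occurred on
    scene: str                # which repeatable scene was running
    minutes_to_failure: float
    minutes_to_recover: float
    safe_path: bool           # device chose defer, slow, or stop
    operator_intervened: bool


def counts_by_rev(events, name):
    """Count events with the given failure name, keyed by revision."""
    return Counter(e.rev for e in events if e.name == name)


def trend_line(events, name, revs):
    """Build a one-line trend such as 'DUST-OPTICS: 2 → 1 → 0'."""
    counts = counts_by_rev(events, name)
    # Counter returns 0 for revisions with no logged events.
    return f"{name}: " + " → ".join(str(counts[r]) for r in revs)
```

Passing the revisions in build order, for example `trend_line(events, "DUST-OPTICS", ["A", "B", "C"])`, keeps a rev with zero events visible instead of silently dropping it.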

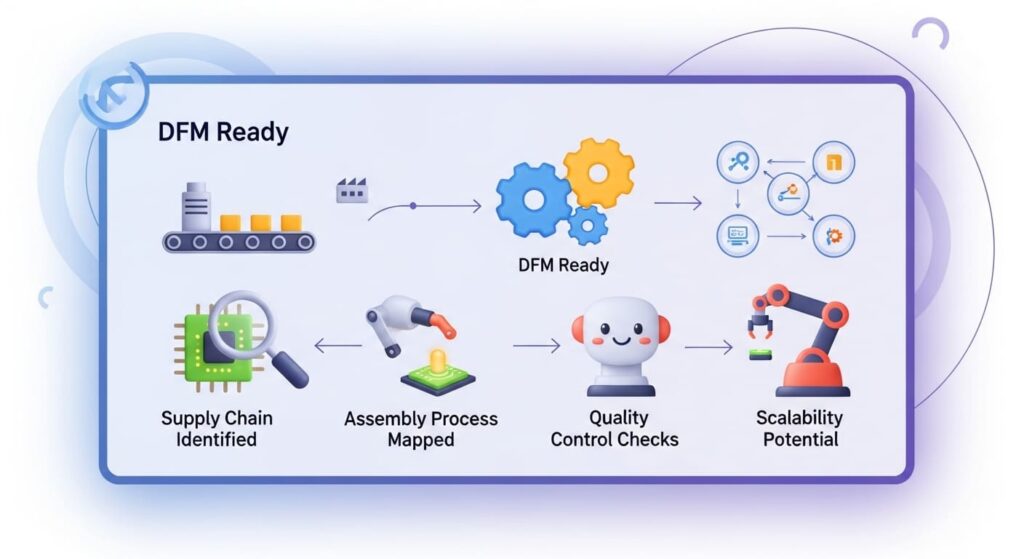

Manufacturability You Can Show on One Slide

You do not need a factory to prove you can build again. You need a small, clean metric that shows stable assembly, stable parts, and a rising first-pass yield. This tells a buyer you will not crumble when they ask for twenty units.

Define first-pass yield for the stage you are in

Pick the build stage you can repeat this month—EVT, DVT, or a pilot jig. Define first-pass yield as “units that pass all functional tests without rework at this stage.” Do not hide rework. Count it. Your credibility rests on this discipline.

List the few functional tests that matter to the job—optical alignment window, motion backlash, thermal soak. Keep the tests short and consistent. If a test is flaky, fix the test before you chase phantom defects.

If you use contract assembly, mirror the same definition with the vendor. Shared definitions prevent “your pass, my fail” arguments that waste weeks.

Measure build time and variance, not just outcome

Log total hands-on assembly time per unit and the variance across a batch. High variance is a manufacturability smell; it means hidden steps or skill sensitivity. Investors read variance as risk. Buyers do too.
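Both numbers are simple enough to compute from a batch sheet. The sketch below assumes each unit is a dict with hypothetical keys `passed_all_tests` and `reworked`, and that assembly times are logged in minutes; the function names are illustrative.

```python
from statistics import mean, stdev


def first_pass_yield(units):
    """Share of units that passed every functional test with no rework."""
    if not units:
        raise ValueError("batch is empty")
    clean = sum(1 for u in units if u["passed_all_tests"] and not u["reworked"])
    return clean / len(units)


def assembly_time_spread(minutes):
    """Return (mean, sample standard deviation, coefficient of variation)
    for hands-on assembly time across a batch of at least two units."""
    m = mean(minutes)
    s = stdev(minutes)
    return m, s, s / m
```

The coefficient of variation (standard deviation divided by mean) is one possible way to compare spread between batches whose average build time differs; the text only asks for variance, so treat the choice as an assumption.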

Note “touches” that slow you down—adhesive cures, torque steps, cable routes. When you cut a touch, note the date and the new time. Time drops tied to specific changes are powerful pre-seed signals.

Photograph each station with the tool and torque spec in frame. These tiny artifacts let a partner believe your time claims and help vendors quote with fewer surprises.

Present yield and “defect killers” together

Show first-pass yield by batch with three dates where it rose. Label each rise with the change that made it happen. “Jig pin added,” “pre-crimp harness,” “optic spacer shim.” Keep labels literal.

Under the chart, add one line on rework time per unit. When rework falls, your story sounds like margin, even before revenue. Time is money in hardware, and time curves are easy to trust.

If a jig, spacer, or alignment method is new and repeatable, document it and consider filing. Production tricks that lock in yield can be protectable, and they anchor your cost story as you scale. Tran.vc helps teams turn these “defect killers” into claims while you keep building—apply at https://www.tran.vc/apply-now-form/.

Safety and Compliance, Made Practical

Safety is not just a lab stamp. It is a set of behaviors your device shows under stress. At pre-seed, your metric should prove the behavior is already real, and your path to formal marks is short and mapped.

Write the safety boundary in plain words

Say what the device senses, what it decides, what it controls, and what it never does. Boundaries reduce fear and speed reviews. Buyers and investors relax when edges are clear.

List the top hazards for your wedge and the safe default for each: slow, stop, or manual confirm. Keep sentences short. People remember defaults more than diagrams.

Map each hazard to a short clip from your bench, rig, or shadow run. Visual proof beats a paragraph, especially when rooms are busy.

Measure guardrail behavior as a number

Count “guardrail events per eight-hour run” in each scene and the share that resolved safely without operator action. This turns safety into a simple ratio you can lift with engineering.

Log time-to-safe-state and time-to-resume. These two clocks show you protect people and uptime at once. A rising safe-resume rate is a strong slide in robotics and lab tools.
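The guardrail ratio can be sketched as a small function. It assumes each event is a dict with hypothetical boolean keys `resolved_safely` and `operator_action`, and that run length is logged in hours.

```python
def guardrail_summary(events, run_hours):
    """Return (events per eight-hour run, share resolved safely with no
    operator action). The share is None when there were no events."""
    if run_hours <= 0:
        raise ValueError("run_hours must be positive")
    per_eight_hours = len(events) / run_hours * 8
    if not events:
        return per_eight_hours, None
    auto_safe = sum(
        1 for e in events if e["resolved_safely"] and not e["operator_action"]
    )
    return per_eight_hours, auto_safe / len(events)
```

Normalizing to eight hours lets a four-hour bench run and a sixteen-hour soak sit on the same chart.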

When the ratio improves, note the change that drove it. “Confidence cue added,” “fallback path tuned,” “threshold learned per station.” Tie cause to effect so your safety story feels earned, not lucky.

Put compliance on a short, dated path

List the exact standards you plan to meet for your wedge. Note the lab you will use, the pre-check you already ran, and the gaps you closed with dates. Timelines beat badges at this stage.

If a novel arbitration or sensing method made those pre-checks pass with less hardware or lower power, write the steps and consider filing. Safety logic that buyers trust is hard to copy; protecting it makes your lift durable.

Share a one-page safety note in your data room: boundary, defaults, two clips, and the standard path with dates. Rooms respect short, clear safety proof more than heavy binders.

Supply Chain Readiness That Calms Rooms

At pre-seed, you do not need volume POs. You need to show that parts exist, vendors respond, and lead times are falling with each rev. A small “readiness” view here removes a major doubt for both buyers and investors.

Define the few parts that set the pace

Name the three long-lead items that gate your build: sensor, compute, and a precision mechanical part. Everything else bends to these. Your metric will follow them.

For each, record current lead time, MOQ, and at least two qualified sources or drop-in alternates. If an alternate needs a small adapter, note it and the week you can build it.

Set a simple target: “two sources or one source plus validated alternate” per long-lead part. This rule is easy to explain and easy to track each Friday.
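The Friday check can be a few lines of code over your parts list. This sketch assumes a hypothetical `LongLeadPart` record; the field names are illustrative.

```python
from dataclasses import dataclass


@dataclass
class LongLeadPart:
    """One pace-setting part (hypothetical record shape)."""
    name: str
    lead_time_weeks: float
    moq: int
    qualified_sources: int
    validated_alternates: int = 0


def meets_sourcing_target(part: LongLeadPart) -> bool:
    """Rule from the text: two sources, or one source plus a validated alternate."""
    return part.qualified_sources >= 2 or (
        part.qualified_sources >= 1 and part.validated_alternates >= 1
    )


def open_gaps(parts):
    """Names of long-lead parts that do not yet meet the target."""
    return [p.name for p in parts if not meets_sourcing_target(p)]
```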

Measure vendor response and yield at small scale

Track quote response time and sample delivery time for each vendor. Speed now predicts speed later. Vendors who respond in days at ten units tend to deliver in weeks, not months, at hundreds.

Run a small incoming QC on samples: basic dims, optical SNR, compute thermals. Log pass/fail and time-to-replace. These logs pay off when a buyer asks “what happens if…?”

Note any packaging or handling steps you add to lift sample yield. Small fixes here—desiccant, tray, torque spec—often remove silent pain that kills schedules.

Present a calm, dated map

Show a tiny table with the three long-lead parts, current lead, target lead, sources, and next step with an owner. Add one dated win per row: “Alt sensor validated—Jan 12.”

Below the table, place a single sentence: “We can build 25 units in eight weeks with current sources; 8–10 weeks with either alt.” Simple, honest ranges read like control.

If your alternate strategy includes a novel adapter or tuning method, capture it and consider filing. Supply resilience that rests on a unique, practical method can be protectable and persuasive.

Pilot Deployment Metrics That Tell the Truth

A small pilot can prove more than a long lab cycle. The right metrics make a two-week run read like a clear “go” or “fix.” Keep them tied to daily work on the floor so the numbers feel real and travel well inside the buyer’s org.

Define the job and the guardrail first

Open with one sentence in the buyer’s words. “Cut re-teach minutes at changeover by half on Line 2.” This anchors every chart and removes debate.

Name the guardrail that keeps risk low. “Dry run before live motion; manual stop always available.” Guardrails make pilots easy to approve and keep data clean.

Freeze constraints: sensor, FPS, light, compute, and shift schedule. Write them on the first page of the pilot doc. Honest bounds make small wins believable.

Measure speed, safety, and stability

Track three clocks per shift: time-to-first-use, time saved per changeover, time-to-safe-resume after a stop. These clocks map to value and risk in plain terms.

Count guardrail events and the share that resolved without operator help. A high safe-resolution rate is a strong early signal even if a few defers happen.

Log “first-pass success” for each run on the pilot scene. A first-pass success means the setup is right and the defaults are working. A first-pass rate that rises over the pilot shows the workflow is becoming routine.

Show cause and effect with dates

Add tiny labels on your trend lines when you ship a change mid-pilot. “Preset tweak Jan 13 → −7 min.” Dates turn improvements into proof, not luck.
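A label like that can be derived from the pilot log instead of estimated by eye. The sketch below assumes samples are `(day, minutes)` pairs for one metric, such as re-teach minutes per changeover, and compares the mean before the change date with the mean on or after it. The function name and the comparison method are assumptions.

```python
from datetime import date
from statistics import mean


def change_label(samples, change_day, what):
    """Build a dated label from the shift in mean minutes around a change.

    samples: list of (day, minutes) pairs for one metric.
    """
    before = [m for d, m in samples if d < change_day]
    after = [m for d, m in samples if d >= change_day]
    if not before or not after:
        raise ValueError("need samples on both sides of the change date")
    delta = mean(after) - mean(before)
    # Month abbreviation follows the process locale (English by default).
    return f"{what} {change_day:%b} {change_day.day} → {delta:+.0f} min"
```

A plain before/after mean is the simplest comparison; with only a few shifts on each side, it is worth showing the raw points next to the label.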

Pair each label with a screenshot or 20-second clip in your appendix. Visuals end debates.

Close with a one-line next step that keeps the same guardrail. “Expand to two stations under same preset.” Calm, near moves keep momentum and shorten PO time.

Unit Economics Hints (Without a Big Model)

You do not need a full finance model to show healthy direction. A few simple ratios, measured the same way each week, can tell a strong story about cost, value, and scale.

Pick ratios that touch the job

Choose minutes saved per shift, support tickets per active station, and cloud/compute cost per hour of operation. These tie directly to what the buyer feels and what you will eventually price.

Use the same window for all ratios (per shift, per day, per week). Stability makes trends readable and avoids chart games.

Write the equation in tiny type on each chart. “Tickets/active = total tickets ÷ active stations.” Transparency builds trust.
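The three ratios can be computed the same way every week from one set of totals. This is a sketch only: the dict keys below are hypothetical names for totals you would pull from your own logs over a single, fixed window.

```python
def unit_hints(window):
    """Compute the three ratios for one reporting window.

    window: dict of totals for the same period (per shift, day, or week).
    """
    return {
        # minutes saved per shift = total minutes saved / shifts run
        "minutes_saved_per_shift": window["minutes_saved"] / window["shifts"],
        # tickets/active = total tickets / active stations
        "tickets_per_active": window["tickets"] / window["active_stations"],
        # cost/hour = cloud and compute spend / hours of operation
        "compute_cost_per_hour": window["compute_cost"] / window["operating_hours"],
    }
```

Keeping all three in one function makes it harder to mix windows by accident, since every ratio reads from the same totals.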

Tie each slope to one change

When minutes saved jump, pin the release: “Auto-preset Jan 10.” When tickets fall, name the UX copy that removed confusion. When cost per hour drops, tag the caching or quantization step.

Move one lever at a time where possible. Single-variable changes are easy to explain and easy to repeat across sites.

Keep a one-line log beneath the charts with dates and deltas. These lines become your talking points in diligence.

Convert hints into price anchors

When minutes saved stabilize, set a pilot fee that is modest against that value (per line or per device). The ratio “fee : value” is your fairness story.

If cost per hour falls after an engineering change, note it in your margin notes. Early cost wins let you hold price later without pain.

If a unique method caused the cost drop (planner, cache, schedule), capture it as steps and consider filing. Protected efficiency is a moat that compounds.

Serviceability and MTTR (Mean Time to Repair)

Hardware wins or loses on downtime. At pre-seed, you can still show that fixes are fast, clear, and safe. MTTR and “no-tools” maintenance are quiet sales weapons.

Design for quick swaps and clear cues

List the top three field-replaceable parts (fan, cable, optics cover). Give each a color tag and a finger-screw if possible. Small design choices lower panic during a shift.

Add on-device cues: a light or message that names the fault in plain words (“Fan blocked,” not “Error 17”). Clarity turns a 20-minute chat into a 2-minute fix.

Ship a one-page “fix card” with photos. One step per line. One tool callout if needed. Your support volume will tell you if the card works.

Measure MTTR and “first-try” rate

Log the time from fault to functional state for each event. Separate “soft clears” (no parts) from “swap clears” (part replaced).

Track first-try success by part. If a part needs two attempts, the design or the card is wrong. Fix the flow, not just the ticket.

Report weekly MTTR with a small table: part, count, median minutes, first-try %. Highlight changes that moved the numbers.
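The weekly table can be built straight from the repair log. The sketch assumes each event is a dict with hypothetical keys `part`, `minutes` (fault to functional state), and `first_try`; to keep soft clears and swap clears apart, you could run it once per kind.

```python
from collections import defaultdict
from statistics import median


def mttr_table(events):
    """Rows of part, count, median minutes, and first-try percentage."""
    by_part = defaultdict(list)
    for e in events:
        by_part[e["part"]].append(e)
    rows = []
    for part, evs in sorted(by_part.items()):
        rows.append({
            "part": part,
            "count": len(evs),
            "median_minutes": median(e["minutes"] for e in evs),
            "first_try_pct": 100 * sum(e["first_try"] for e in evs) / len(evs),
        })
    return rows
```

Median is used here, as the text suggests, so one long outage does not hide a week of quick fixes.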

Turn fixes into growth, not drag

When a swap step drops MTTR, bake that win into the rev and your BOM notes. Show the effect in your deck: “Optic cover redesign → MTTR 14 → 3 min.”

If you invented a clever latch, jig, or alignment trick, write it as a method and consider filing. Serviceability inventions are often protectable and valued by buyers.

Tie MTTR to retention in pilots. Faster fixes → more trust → more lines. This chain is a strong, simple seed story.

Certification Pre-Checks That Shorten Reviews

Formal marks take time. Pre-checks show the path is short and mapped. They also catch surprises early, when changes are cheap.

Map the standard to your boundary

List the exact clauses that apply to your device and use case. Note which ones you already meet by design (“no raw images stored,” “manual stop present”).

For each test, write the planned method and the rig you will use. Simple plans calm reviewers and help labs quote faster.

Add a one-line risk note: “EMI risk on motor harness—shielding in Rev C.” Owning risk beats hiding it.

Run small, honest pre-tests

Do a benchtop EMI sniff with known bad and known good scenarios. Log setup, distance, and readings.

Do a thermal soak under worst-case ambient for your wedge. Record peak temps and recovery time with and without the new fan curve.

Do a safety behavior check: trigger guards, confirm fallbacks, and show manual override. Clips here will cut days from approval threads.

Present a dated path to the mark

Build a tiny timeline: pre-checks done, lab booked, sample ship date, report target. Honest dates, not dreams.

Tie each closed gap to a design change with the rev noted. “Cable shield added—Rev C (Jan 18).”

If a compliance-friendly method is novel (sensor fusion that needs less shielding, for example), capture it and consider filing. Compliance that comes from invention is durable advantage.

A Two-Slide Summary You Can Paste in Your Deck

Partners and investors need a story they can retell without you. Two clean slides can carry your whole pre-seed metrics story.

Slide A — Proof under real limits

Top: one-line job in buyer words. Below: three small boxes for Pilot, Reliability, and Safety. Each has target, result, constraint, and one still image.

Add two tiny date labels where changes moved results. Keep labels literal: “Auto-preset Jan 13,” “Baffle Rev B Jan 21.”

Footer: a quiet method note—“Glare-safe preset; provisional filed.” Outcome meets moat in one breath.

Slide B — Path to scale and dollars

Left column: first-pass yield by batch with three dated process changes; MTTR mini-table showing a big drop on one part.

Right column: unit hints (minutes saved/shift, tickets/active, cost/hour), each with one lever tagged.

Bottom bar: two-week guarded pilot offer (scope, guardrail, metric, owner, start date) and a small supply readiness table (three long-leads, lead times, alternates).

Keep the appendix light and lethal

Append: one 20-second pilot clip, one reliability clip, one safety clip, one photo of the assembly jig with torque spec, one-page security/safety note, and your MOU/LOI excerpt (redacted).

Name files clearly and date them. “Pilot-Changeover-Clip-2025-01-15.mp4.”

Swap in fresher proof monthly; keep the frame the same so change stands out at a glance.

Field-Readiness Score (One Number to Rule the Noise)

Complex dashboards confuse. A simple composite shows direction. Keep it transparent so anyone can recalc it.

Define four equal parts

Use Activation-in-Pilot (first-pass %), Reliability (scenes passed), Manufacturability (FPY this batch), and Serviceability (MTTR vs. target). Score each from 0 to 100 using simple equations on a public sheet.

Equal weights keep arguments short at pre-seed. You can re-weight later, but parity now rewards balance.

Publish the formulas in the corner of your slide. Openness builds trust and kills “black-box score” debates.
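A sketch of the composite follows. The text names the four parts and the equal weighting but not the exact equations, so the sub-score formulas here are assumptions: reliability as the share of scenes passed, and serviceability as target MTTR divided by actual MTTR, capped at 100.

```python
def clamp(x):
    """Keep a sub-score inside 0 to 100."""
    return max(0.0, min(100.0, x))


def field_readiness(first_pass_pct, scenes_passed, scenes_total,
                    fpy_pct, mttr_minutes, mttr_target_minutes):
    """Return (composite, sub-scores, name of the lowest sub-score)."""
    if scenes_total <= 0 or mttr_minutes <= 0:
        raise ValueError("scenes_total and mttr_minutes must be positive")
    subs = {
        "activation": clamp(first_pass_pct),
        "reliability": clamp(100 * scenes_passed / scenes_total),
        "manufacturability": clamp(fpy_pct),
        # Assumed formula: hitting or beating the MTTR target scores 100.
        "serviceability": clamp(100 * mttr_target_minutes / mttr_minutes),
    }
    composite = sum(subs.values()) / len(subs)   # four equal weights
    lowest = min(subs, key=subs.get)
    return composite, subs, lowest
```

Returning the lowest sub-score alongside the composite keeps the number tied to a concrete area to work on, rather than leaving it as a bare total.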

Update weekly with the same inputs

Recompute each Friday from logs. Do not backfill or “clean” awkward weeks. Real bumps show you learn.

When you ship a change that moves one component, annotate the composite with the same date. Cause-and-effect becomes visible at a glance.

Freeze definitions for a month at a time to avoid “chart shopping.” Stability beats perfection.

Use the score to guide sprints

Pick the lowest sub-score and plan one five-day move to lift it. “MTTR low → redesign latch.” “Reliability low → dust baffle.”

Show last week’s score and this week’s score in standup. Small, steady gains keep morale up and meetings short.

When a method lifts the score twice, capture it and protect it. Now your composite is powered by assets you own.

Turn Metrics into a Calm Fundraising Story

Numbers alone do not close. Numbers plus simple words from the field do. Wrap your metrics in quiet, human lines that a busy partner can repeat.

Pair each chart with one raw quote

Place a line from a shift lead or lab tech near the relevant chart. “Dry run made it safe to try on shift.” Quotes anchor charts in reality.

Keep names and roles (with permission). Real voices beat polished taglines.

Use the same quotes in your one-pager and update emails. Repetition makes memory.

Always show dates and the next move

Every chart gets two tiny date labels where you shipped and saw a lift. “Preset Jan 13,” “Baffle Jan 21.” Dates create trust because they constrain the story.

Close each section with “Next:” and one small step you will ship in five days. You sound like a team in motion, not a team in wait.

Mirror these “Next” items in your weekly update so the dots line up across artifacts.

Tie your best lifts to owned methods

When a metric jump rests on a unique method—preset, planner, jig, latch—write the flow in plain steps and file a focused provisional.

Note it lightly on the slide: “Method protected.” Calm, not loud.

This link (lift → method → claim) is what lets you raise on your terms at seed.

Conclusion: Make Small Numbers Speak Loud

You can prove a lot before big runs. Use scenes that match the floor. Log what happens in clear words and simple clocks. Show how one small change moves one clean chart. When you do this each week, your story feels calm. Buyers see less risk. Partners see a plan. Investors see a path to dollars.

Turn every lift into a habit. Save presets. Add dry runs. Fix latches. Cut re-teach. Lower MTTR. Raise first-pass yield. Keep dates on every change. Keep one clip for every claim. When the same method wins twice—whether it is a glare-safe preset, a dust baffle, a jig, or an arbitration step—write the flow and protect it. Now your best results sit on assets you own.

Give yourself two weeks to prepare. Week one: freeze definitions, rerun your three field scenes, update reliability, yield, MTTR, and your unit hints, and record one 20-second clip per scene. Week two: lock a two-week guarded pilot offer, tighten your one-page safety and security note, and build the two slides that carry it all. Small, steady proof beats loud promise. That is how hardware raises well at pre-seed.

If you want a partner at your side, Tran.vc invests up to $50,000 in in-kind patent and IP services for robotics, AI, and deep tech teams. We help you pick the wedge, file around the engines that move your metrics, and package proof that travels. If that is your next step, apply anytime at https://www.tran.vc/apply-now-form/.